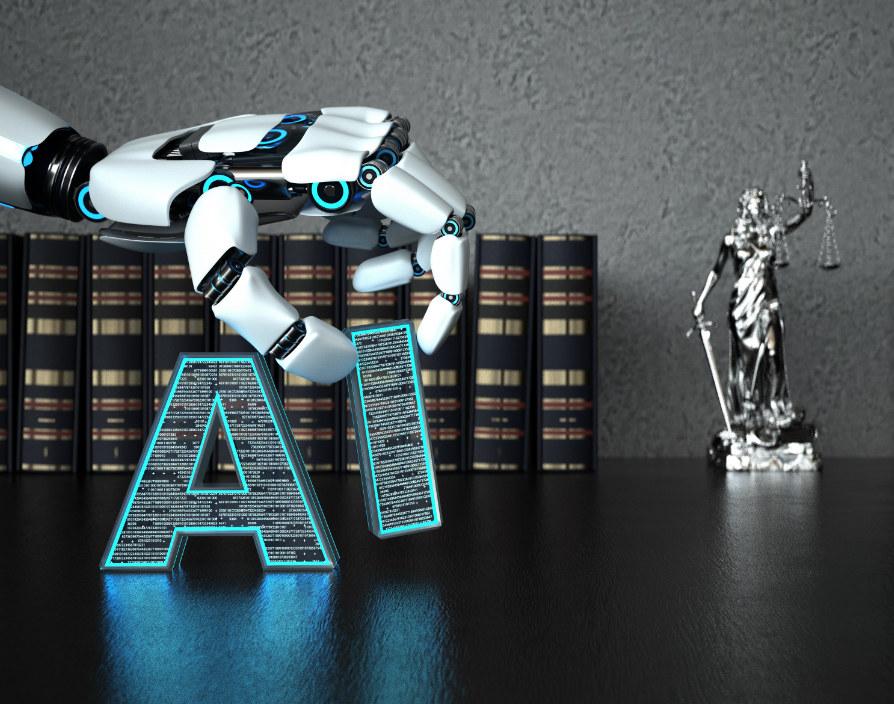

Addressing inequality has become one of the top priorities of many organisations, not only businesses but also public bodies. Artificial intelligence (AI) has long been praised by legal and tech experts as a crucial tool for eliminating discrimination in businesses. However, concerns have arisen that the use of certain AI algorithms may actually result in bias and discrimination. Indeed, recent guidance from the Equality and Human Rights Commission (EHRC) focuses on how AI systems may produce discriminatory outcomes and what interventions organisations should make to avoid breaching equality law.

This article analyses the importance of equality and the impact of AI on efforts to combat discrimination.

Equality & discrimination

The Equality Act 2010 protects a person from discrimination and/or victimisation because they possess one or more of the protected characteristics, one of which is sexual orientation. Under the law, therefore, you must not be discriminated against, harassed or victimised because you are heterosexual, homosexual or bisexual. Both direct and indirect discrimination are prohibited; for example, an organisation cannot refuse to provide services to anyone because of their sexual orientation. The law also forbids discrimination by association, whereby a person is treated less favourably because of the sexual orientation of someone they know, e.g., a family member or friend.

The Act applies widely, to both the private and the public sector, covering employment, suppliers, service providers and public services such as healthcare, education, transport and utilities.

Promoting equality makes sense for any organisation from both a business or public-service perspective and an ethical standpoint: it not only helps staff and customers feel respected but also helps ensure that the organisation functions properly.

Artificial intelligence

The term ‘AI’ can be used to refer to a wide range of technologies used in the private and public sectors, including artificial intelligence, machine learning and automated decision-making.

Generally, AI means using computer algorithms to support decision-making or the delivery of services and information. In other words, it involves programming computers to process large volumes of data and ‘learn’ to answer questions or perform specific actions. As a result, AI is often regarded as capable of making more informed and consistent decisions without being affected by human error and bias.

Can AI deepen inequality?

Building an AI system is a complicated process, and without an in-depth understanding of how a system works, its use may lead to discrimination and deepen inequalities.

Whilst AI has come a long way since Amazon’s internal HR machine learning tool, which was found to have a significant bias against women, the fundamental issue of bias in AI has by no means been eradicated. In particular, AI is ‘trained’ to make decisions or perform other actions based on the data it is given, and that data can be fundamentally biased. In addition, bias may arise from the way the AI system is developed and programmed, or from the people developing and programming it. Further, the bias may ‘deepen’ or develop over time as the system is used and implemented.

Bias in AI is therefore a significant issue that cannot easily be solved, as it goes to the heart of the technology itself. Although there has recently been a big push to address bias in AI, the issue is deeply rooted in the data that underpins the technology. More importantly, the bias itself is rarely deliberate and may not always be readily identifiable. For this reason, there is a significant focus on AI and the ethics behind it, especially when it comes to equality.

Organisations using AI should consider the following measures to help avoid bias and breaches of equality law:

- Organisations should prioritise understanding the AI system being implemented, such as how it works and how it makes decisions, and should train their staff to do the same. AI should not be relied upon blindly but viewed with a critical lens and an appreciation of the risk of inherent inequalities in the underpinning data. It is fundamental that organisations remain acutely aware that AI systems and their ethical underpinnings may be aligned neither with the organisation’s own ethics nor with the current legislative position in the UK. Whilst AI may have numerous benefits, such as savings in time and cost, it should not overshadow human intervention, and appropriate checks and measures should be firmly in place. For the time being, AI should be used as a tool to help organisations with certain processes, not as a replacement for human decision-makers and staff.

- Organisations should critically examine their use of AI and have sufficient checks and measures in place to ensure that it does not produce discrimination that would cause the organisation to breach its standards and legislative obligations. AI systems should be properly tested, their results closely monitored and effective checks implemented. Any complaint should be properly investigated. Organisations should also ensure that their policies state clearly whether any automated decision-making process is used, and that any such processing complies with relevant data protection rules. Ultimately, responsibility and potential liability rest not with the AI system but with the organisation using it. Whilst there may be some legal recourse against the provider of the AI system, depending on the contractual terms, any investigations, claims or actions will be brought against the organisation, potentially causing financial loss and reputational damage.

- Organisations should also keep up to date with all relevant legislation and guidance, such as that relating to equality and data protection. The EHRC guidance is helpful because it gives practical examples of how AI systems may cause discriminatory outcomes and suggests ways to help organisations avoid breaches of equality law. The Information Commissioner’s Office (ICO) has also published guidance on AI and data protection, which sets out the ICO’s recommended best practice for ensuring that AI systems comply with data protection law as well as equality legislation.

Related links:

https://www.equalityhumanrights.com/en/advice-and-guidance/artificial-intelligence-public-services
